Checking in with the system at mid-semester

This is a repost from Grading for Growth, my blog about alternative grading practices that I co-author with my colleague David Clark. I post there every other Monday (David does the other Mondays) and usually repost here the next day.

Last week David wrote about how to handle mid-semester evaluation of students. It turns out professors can be evaluated at mid-semester as well, and in fact I’ve written elsewhere that doing so is a really good idea. In addition to giving my own brand of frequent feedback surveys to my Discrete Structures for Computer Science students at three-week intervals this semester, last week I had a Mid-Semester Interview on Teaching (“MIT”) conducted by our Faculty Teaching and Learning Center.

Because David and I are not just pundits but actual instructors working out the day-to-day details of alternative grading systems with actual students, I thought it might be helpful, especially for newbies or those who are curious, to see what my students are actually thinking about my grading system and how I intend to make halftime adjustments to amplify what’s working and address their concerns.

What is an MIT?

In an MIT, a trained facilitator comes in, kicks me out of the room, and asks students to respond individually to two questions:

- What are the major strengths of this course? What is helping you learn?

- What changes would you make in this course to assist you in learning?

Then students get into small groups, share their answers with each other, and write them on the whiteboard. Finally, there’s a full-class discussion about what they said.

I find MITs to be the most valuable thing I can do to see what’s really working in my classes and to surface potential problems. They’re especially helpful for students, since the discussions tend to put their concerns in context: a student might think that “lots of people” share a concern about something in the class, but after discussion it turns out to be just a couple of people. And the results come early enough that I can make meaningful changes to the course. MITs plus four rounds of my five-question survey data make course evaluations basically obsolete. If your institution offers such a thing, schedule one. If not, find a colleague and trade off doing DIY MITs for each other.

There was a lot to talk about with my class, not just the grading system, since I use a flipped learning approach and do some borderline-crazy things like teach students how to code in Python as part of the course. For this post, I’ll address only the stuff that students said about the grading system.

Recap of the system

Here’s my syllabus for the course. The grading system starts on page 3. In a nutshell:

- There are three main kinds of assignments in the course: Daily Prep, which contains recorded lectures and pre-class exercises used in the flipped structure; Learning Targets, which are basic skills assessed in three possible ways (quizzes, oral quizzes in office hours, or videos); and Weekly Challenges, which consist of application and extension problems. Here’s the list of Learning Targets for the course. Here’s a sample Daily Prep, a sample Learning Target quiz, and a sample Weekly Challenge.

- All of these are graded “Satisfactory/Unsatisfactory” using specifications that I set up in advance. For what follows, note that Weekly Challenges consist of multiple linked problems and the entire thing is graded as one unit, rather than each problem being graded separately. Learning Target quizzes are the opposite — each quiz contains a separate problem for each Learning Target, and students pick and choose which ones they want to try at each quiz (given once every two weeks, and more frequently than that near the end of the semester). Then each problem/target is graded separately.

- Of the 20 Learning Targets in the course, eight are designated Core targets, meaning they are the essential skills of the class. Students demonstrate skill on the targets through work on quizzes, oral quizzes in office hours, or videos (or some mixture of these). Demonstrating skill on a Learning Target on two separate occasions means the student is fluent on that target.

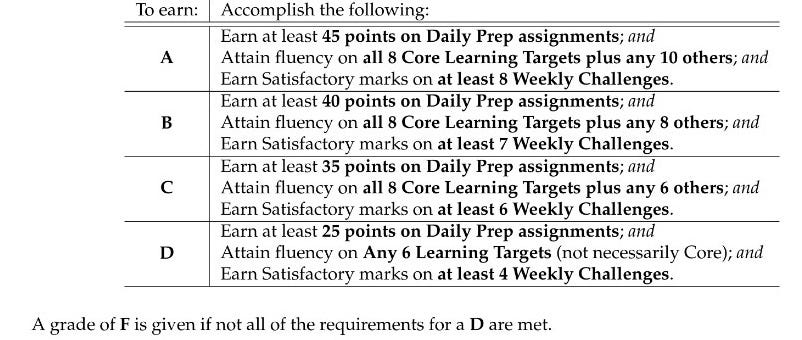

- To earn a course grade, students level up through various thresholds of accomplishments, summarized in this table from the syllabus:
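Since the course itself teaches Python, the fluency rule described above (Satisfactory work on a Learning Target on two separate occasions, by any mix of methods) can be sketched as a short Python function. This is a hypothetical illustration, not the actual gradebook for the course: the `Attempt` record, the target labels such as "LT-1", and the choice to treat each calendar date as one "occasion" are all assumptions.

```python
from dataclasses import dataclass
from datetime import date

# Number of separate occasions with a Satisfactory mark needed for fluency.
FLUENCY_THRESHOLD = 2


@dataclass(frozen=True)
class Attempt:
    target: str         # hypothetical Learning Target label, e.g. "LT-1"
    occasion: date      # assumption: one calendar date = one occasion
    method: str         # "quiz", "oral", or "video"; any method counts
    satisfactory: bool  # the Satisfactory/Unsatisfactory mark


def fluent_targets(attempts):
    """Return the targets marked Satisfactory on at least two distinct occasions."""
    occasions_by_target = {}
    for attempt in attempts:
        if attempt.satisfactory:
            # Using a set means two successes on the same day count only once.
            occasions_by_target.setdefault(attempt.target, set()).add(attempt.occasion)
    return {
        target
        for target, occasions in occasions_by_target.items()
        if len(occasions) >= FLUENCY_THRESHOLD
    }


# Hypothetical records for one student (not real course data).
attempts = [
    Attempt("LT-1", date(2024, 2, 1), "quiz", True),
    Attempt("LT-1", date(2024, 2, 8), "oral", True),   # second occasion: fluent
    Attempt("LT-2", date(2024, 2, 1), "quiz", True),
    Attempt("LT-2", date(2024, 2, 1), "video", True),  # same occasion: counts once
    Attempt("LT-3", date(2024, 2, 1), "quiz", False),  # Unsatisfactory: not yet
]

print(fluent_targets(attempts))  # prints {'LT-1'}
```

Grouping successes by occasion rather than simply counting Satisfactory marks is what keeps two passes on the same day from counting as fluency.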

Daily Preps do actually use points, a concession I made this time around to keep things simple. Each one is worth two points, based on the outcome of the pre-class work and of a group quiz at the start of class. There are 27 Daily Preps (54 points possible in total), 20 Learning Targets (8 of them Core), and 10 Weekly Challenges.

There is also a system of tokens, a common feature of specifications grading. Tokens are a kind of fake currency that students can spend to bend the course rules. For example, any deadline can be extended 24 hours by spending a token. Everyone starts with 5 tokens, and there are occasional opportunities to earn more.

And there is a system of revision and resubmission for everything other than Daily Prep. Learning Targets can be attempted and reattempted as many times as needed, in any combination of the three methods (paper quiz, oral quiz, or video). Weekly Challenges can be revised and resubmitted as many times as needed, up to two revisions per week (three if you spend a token) and subject to last-chance deadlines for the early Weekly Challenges. Nothing is penalized: if your work isn’t up to specs yet, just study the feedback and do it again.

Aside: What kind of grading is this?

One thing worth pointing out parenthetically: I think my system shows that mastery-based grading can mix and match different approaches. What I have here is heavily influenced by specifications grading. But some of the actual grading, particularly on Weekly Challenges, is basically ungrading: I do put a grade on student work, but only as a marker to let students know whether their work is “good enough yet” or not. The main action is in the feedback loop. And there are probably undertones of other grading approaches here as well. I focus less on the name of the thing I am doing than on the underlying concepts of the thing itself. If you’re new, or curious, I think that can be quite freeing.

What students say about this system after 7 weeks: The good

Here’s what my 24 students said helps their learning, specific to the grading system:

- The fact that they get many attempts at each assignment is, to them, “incredibly helpful”.

- They also found that feedback helps them. In some ways that seems like a funny and obvious thing to say. I mean, of course feedback helps, doesn’t it? How could it hurt? But then I think about the many times that I, as a student, got feedback that was not only unhelpful but downright mean-spirited, or the many more times that I got nothing at all but a number or a letter.

- They also said that I give “fairly precise feedback”. When pressed on what “fairly precise” means and how I might give more precise feedback, nobody offered any suggestions. But actually I think “fairly precise” is the right thing to aim for. Feedback should be specific, but it also shouldn’t tell students exactly what they need to do next, at the level of “Change this sentence to say this instead…” My feedback tends to point out the exact spot where more work needs to be done and then give questions to think about.

- On that last point, students suggested that a face-to-face meeting to discuss feedback might be even more helpful. I couldn’t agree more, and that’s why I have three office hours a week. It sounds like I need to be clearer with students that they have the right to ask for this kind of feedback if they find it helpful.

- Finally, students overall liked that the grading of Learning Target problems allows for two “stupid” mistakes (the specs call them “simple”); that the entire system requires fluency (students like high standards if they are supported well); and that it takes the stress off of grades and places it on learning instead. In general, students feel it’s a better approach than traditional grading.

What students say about this system after 7 weeks: The not-as-good

But it’s not all sunshine and unicorns:

- Approximately half the class indicated frustration with the grading system, and the frustration seems to come from several sources.

- Some wished for partial credit on Weekly Challenges. Others expressed concern about snowballing workloads as they try to revise old Weekly Challenges while new ones keep coming in.

- Students expressed that “forcing 100% correctness” doesn’t give “much leeway” in demonstrating skill.

- And the Weekly Challenges, which focus on problem solving, reasoning, and proof rather than computation, are quite difficult because they require general mathematical reasoning instead of routine calculation.

- Finally, students were a little confused about tokens. What are you supposed to do with them? Will we have chances to earn more?

I think all of these are legitimate concerns, and I probably would have some of them too if I were a student in my class. One thing I notice is that many of the concerns are not about the system itself but about how clearly (or not) the system is explained. For example, “100% correctness” is not required on anything, and students even indicated that they know this because of the “stupid mistake” allowance. But the binary Satisfactory/Unsatisfactory scale might lead a person to think so, since the common interpretation of that scale is “all or nothing”. And the uses of tokens and the opportunities to earn more are both described in the syllabus, but do students know that? For these concerns, I need to be clearer in my explanations, and I will probably take 10–15 minutes this week to address them.

I also need to do better at explaining the “why” behind some of the assignments. There’s a simple reason there’s no partial credit on Weekly Challenges: the entire assignment operates as a unit, like an essay. An essay doesn’t “pass” if the intro and references are OK but the middle of the paper needs work. The nature of Weekly Challenges also needs to be better explained: they are not basic skills tests (that’s what the Learning Target quizzes are for) but opportunities to show one’s skill in overall mathematical reasoning. This is a core computer science skill, and my students have rarely, if ever, been asked to work on it before. Explaining more clearly why they are being asked to do this week after week, including revisions, is on me.

Finally, I need to do better at helping my students manage the workload. Students raised a concern about snowballing workloads as past revisions collide with new assignments. But in fact, prior to this weekend, only about 15 revisions of previous Weekly Challenges had been submitted at all, across 48 students in two sections. So I wonder about that “snowball” effect; it’s definitely not coming from actual revisions. Maybe students are putting off revisions because of the new work they have to turn in. If that’s the case, they should realize that most revisions are pretty minor, don’t take a lot of time, and are very doable in a given week if you know how to budget your time. Which is a very big if.

Finally, trust

When I was debriefing my MIT with the facilitator, the first thing she emphasized to me wasn’t actually in her notes: “Students expressed that they trust you.” We could have stopped right there and I would have been very happy. When we talk about “getting buy-in”, we are really talking about trust. Making a system like this work with students requires that we earn their trust first, and I do mean “earn”, since students do not necessarily come into a class trusting professors. If students had said they were on board with the grading system but didn’t fully trust me yet, that would have been a red flag. But if they express trust, even if there are issues (and there are!), then we have a solid foundation for working those out.

And I think a core foundation for that trust is asking students what they think, taking what they say seriously, and making a good faith effort to adjust. After all, that’s exactly what we are asking them to do when we engage them in the kinds of feedback loops we are talking about in alternative grading systems.