On the care and handling of student ratings

The holiday season has two distinct meanings for college professors. It's a time of peace and relaxation, when we take a break from the grind of work to enjoy time with family and friends; and it's a time of extreme anxiety, when we receive our student course evaluations for the Fall semester. There seems to be no moment in a college professor's life more anxiety-provoking – not job interviews, not being evaluated for tenure – than the moment our course evaluations arrive after grades are turned in.

I taught only one course this Fall because of reassigned time as department chair. It didn't go well, and I knew my evaluations weren't going to be great. I was right. After I read them, I tweeted this:

Got my student evals today for the class I taught this fall. They were not pretty, in fact among the lowest numerical results I've received in many years. Why bring this up? To say that we all need work & don't feel shame if you did poorly, just reflect and get better.

— Robert Talbert (@RobertTalbert) December 19, 2019

This has become one of my most "liked" tweets of all time, and I've been thinking about why the idea resonated: that a person with 20+ years of teaching experience, who gives workshops on teaching, who wrote a book on teaching for goodness' sake, can get bad evaluations. So here are some thoughts on student evaluations, drawn from that reflection and from some of the questions people asked in the replies.

Student evaluations are not evaluations

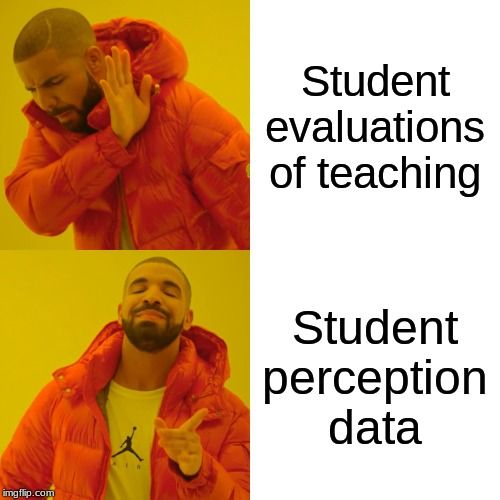

An "evaluation" of something implies a systematic examination of that thing, based on comparisons to approved standards, conducted by evaluators who have training and experience in making those comparisons. Whatever "student evaluations of teaching" are, they are most certainly not true evaluations in this sense. So we should stop referring to them as such.

In my department, we've decided to simply start calling them what they really are: self-reports of how students perceived their experiences in the course. The term we're using is student perception data.

Reframing "course evaluations" as student perception data immediately holds them up to the light and helps you interpret them properly. Some of the awful baggage of "course evaluations" may even fall away.

Student perception data have value

This isn't to diminish the value that student perception data can have in actually evaluating our teaching. (That's a job we do for ourselves, and that our colleagues do for us through merit review and personnel actions.) Student perception data on a course are qualitative information that can reduce uncertainty about how the course was designed and conducted. They are data, in other words (qualitative data, to be precise), and we can use them to pick out the messages our students are sending us.

Of course – as we know all too well – there are numerical items on these forms, so there is a quantitative angle on student perception data. But this shouldn't distract from the basic fact that the data are self-reports of individual students' perceptions. Therefore, like all qualitative (or mixed-methods) data, the results need to be handled with care and from a holistic viewpoint.

So how do we handle them?

How to handle your own student perception data

How to interpret student perception data is a complex question, and I won't attempt to be exhaustive here. (This research article by Angela Linse is really good for a deeper dive into the issue; we used it in our department to start our conversations about student ratings.) What follows is just advice from my own experience.

Don't do any of the following with student perception data:

- Feel shame over poor results. Always remember, teaching is a wickedly complex problem; nobody has it completely figured out; and any teacher can have a bad time in a course in any given semester. Sometimes, despite our best efforts, the magic just isn't there. Or maybe – due to external life issues, internal mental health concerns, or good old-fashioned burnout – we actually didn't give the course our best effort. Whatever the reason, the survey results do not define you.

- Compare yourself to other professors. As a corollary, never treat student perception data as some sort of ordinal ranking in which I'm a better teacher than you if my numbers are higher than yours, or if I had glowing verbal responses to post on Twitter and you didn't, or if a student gave me a nice thank-you note or gift at the end of the semester and none of your students did anything like that for you. And a corollary to that: if you got a great student response that you want to post on social media, be mindful of others who might feel shamed by it.

- Fixate on a small number of highly negative responses. This is something you hear a lot – don't let the outliers skew your own perceptions of the data. Of course it's true, but also easier said than done. I wish I had a formula for making this easy, but I don't. Just try not to do it.

- Fixate on a small number of highly positive responses. There's an equal and opposite danger in hanging on to the really great, Twitter-worthy student responses. These are outliers just as much as the incredibly negative responses are. Certainly enjoy the feeling of knowing you had a strongly positive impact on students; but also be skeptical of your own press.

Do this instead:

- Treat student perception data like any qualitative data. Remember, this is not a Yelp review; it's data that need proper analysis in order to be meaningful. Review the data several times before analyzing them, not just once to get it over with. Read the verbal responses to look for patterns, clusters, and themes. Code the data according to the themes you find. Use the numerical results to triangulate the data and corroborate your coding. Interpret the results accordingly. If you're unused to this kind of analysis, there are a lot of great introductory guides that will really help.

- Look for the signal in the noise. In the end, your job is to ask and answer the question: What messages are my students sending me? Fixating on the highly negative and highly positive outliers gets in the way of this. Being defensive about what students say is also a big danger. Instead, look for the information in the data. Some messages might not seem valid at first but hide the real message one or two levels down. For example, if a lot of students ask for no timed testing in the class, that seems absurd; so what are they really asking for? Perhaps the message is "We'd learn more if we did projects instead of tests" or "We'd learn more with smaller-stakes assessments rather than a few high-stakes ones." Don't accept the message you read at face value.

- Also ask: What worked? What was missing? What improvements will I make next time? When I've gotten bad results (like this time) I've always found it helpful to take a problem-solving approach. OK, so the course wasn't great. Why? Was there something I needed to start doing, or stop doing, or continue doing that would have helped? Answer those questions honestly and then make a plan for how to fix it next time.

How to handle student perception data as a department

I'll end with some thoughts about student perception data from the standpoint of a department chair overseeing annual review and promotion/tenure processes. These are my own beliefs, and I don't speak for my department, although many of them are baked into our policies and my beliefs have been shaped by those policies.

- Never use student perception data as the sole, or even the main, source of information about a faculty member's teaching. Teaching, as I said, is a wickedly complex problem. It simply cannot be reduced to one set of data, let alone (as sometimes happens) to a single number. To get an accurate picture of a faculty member's teaching, you need more than student perceptions. Use faculty self-evaluations, peer evaluations via class visits, faculty-initiated data collected through pre- and post-testing... Insist on multiple sources of data for faculty evaluations, and make it easy to include them.

- Never compare one faculty member to another based on student perception data. A mantra for our merit review process is that the faculty member is their own control group. It's easy to fall into the trap of thinking or saying, "Prof. A teaches the same course as Prof. B and has better evaluations, so what's Prof. B's problem?" I was subjected to this kind of "analysis" myself in a previous job. It's unhelpful, unscholarly, and frankly a pretty terrible thing to do to someone. Focus on the faculty member being evaluated, not their peers.

- Look at trends over time and at how faculty respond to their data. Possibly more important than the data themselves is how the faculty member responds to them. Say Prof. C has a terrible semester and gets low student ratings. This does not define Prof. C. What does define Prof. C is what happens next. Do they take ownership, look for the signal, and work the problem? Do we see intentionality and improvement, no matter how slow or halting? Or do we see defensiveness, dismissiveness, student-blaming, obstinacy? In some ways, it's the response to the data that holds the real data.

I hope this is helpful, especially for those of you – those of us! – whose teaching this semester wasn't what we'd hoped it would be, and for the peers of those folks whose job is to help them get better.